In this post I will discuss the algorithm for linear regression and how to run it using Python’s Theano package. Though linear regression is a relatively simple problem, Theano has been used extensively for machine learning, and this example was chosen primarily as a way to get familiar with it.

Theano install instructions

For those who find the above set of instructions tiresome, the Anaconda Python distribution offers an easier route to installing Theano along with its prerequisite Python packages.

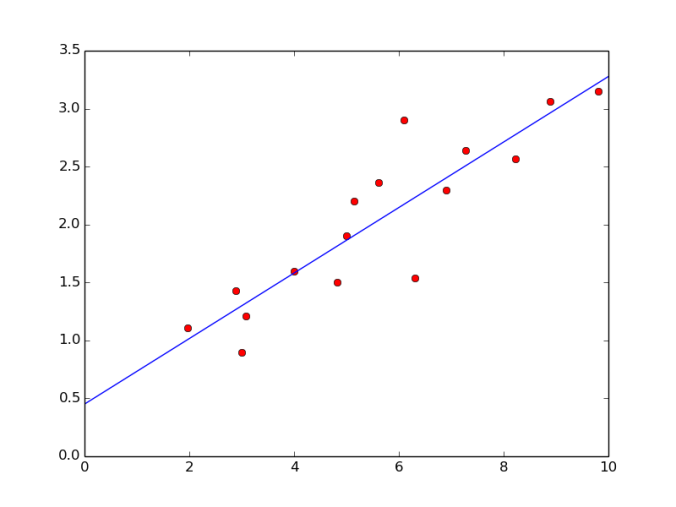

For this example we will use linear regression to determine the coefficients of the line that best fits a given set of points. That is, given two variables that are linearly related, we “learn” the equation that best describes the relationship between them.
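As a point of reference, a straight-line fit like this also has a well-known closed-form least-squares solution. The plain-Python sketch below is not the method used in this post (we use gradient descent in Theano); the function name `least_squares_line` and the sample points are made up for illustration.

```python
def least_squares_line(xs, ys):
    """Closed-form least-squares fit of y = m*x + c; returns (m, c)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    m = s_xy / s_xx
    c = mean_y - m * mean_x
    return m, c


# Illustrative points lying exactly on y = 2x + 1.
m, c = least_squares_line([0.0, 1.0, 2.0], [1.0, 3.0, 5.0])
print(m, c)
```

A result like this is handy as a sanity check: the slope and intercept found by gradient descent should approach the closed-form values.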

The Data

We will be using a set of points stored as the vectors X and Y. The points are chosen such that there is a roughly linear relationship between X and Y.

    import numpy
    import theano
    import theano.tensor as T
    import matplotlib.pyplot as plt

    rng = numpy.random

    # Training data
    X = numpy.asarray([3,4,5,6.1,6.3,2.88,8.89,5.62,6.9,1.97,8.22,9.81,4.83,7.27,5.14,3.08])
    Y = numpy.asarray([0.9,1.6,1.9,2.9,1.54,1.43,3.06,2.36,2.3,1.11,2.57,3.15,1.5,2.64,2.20,1.21])

Since it is the equation of a line that we are trying to fit, we have two coefficients:

i) Slope m

ii) Intercept c.

We start by randomly initializing the coefficients. m and c are initialized as shared variables in Theano. Shared variables are hybrid symbolic and non-symbolic variables whose values can be shared between multiple functions. Another aspect of shared variables is that, when Theano is configured to use a GPU, their values can be kept in GPU memory, which avoids repeated transfers during computation. Since m and c are read and updated at every training step, we initialize them as shared variables.

Theano deals with symbolic variables and expressions. These symbolic variables are different from the variables that we usually define in Python: they hold no values of their own and only describe a computation. Theano builds a graph from these expressions, which it can optimize and compile before any data is passed in, and this is what allows it to achieve higher computation speeds.

We define x and y as two symbolic variables.

X.shape[0] gives the number of elements in the vector X.

m_value = rng.randn()

c_value = rng.randn()

m = theano.shared(m_value,name ='m')

c = theano.shared(c_value,name ='c')

x = T.vector('x')

y = T.vector('y')

num_samples = X.shape[0]

Defining the Cost function

Most machine learning models have a cost function which they try to minimize. The cost is essentially the cumulative error of the model's predictions for the current set of coefficients. During training, the model tries to minimize the cost in order to better fit the training data. The definition of the cost varies with the model as well as the application.

For our case, we take half the mean squared error (MSE) between the predicted values and the true values as the cost.

    prediction = T.dot(x,m)+c
    cost = T.sum(T.pow(prediction-y,2))/(2*num_samples)

We multiply all the input values by the slope m and add the intercept c to get the values predicted by the current model. To calculate the cost, we take the difference between the predicted value and the true value ( prediction - y ), square it, and sum over all points ( T.sum(T.pow(prediction - y,2)) ). We then divide the sum by twice the total number of points ( 2*num_samples ). The extra factor of 2 does not change where the minimum lies; it only cancels the 2 that appears when the square is differentiated.
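To make the cost definition concrete, here is a small plain-Python sketch (no Theano needed) that evaluates the same expression for a given slope and intercept. The helper name `half_mse` and the three sample points are assumptions made for illustration, not part of the original code.

```python
def half_mse(xs, ys, m, c):
    """Return sum((m*x + c - y)^2) / (2 * N) for the line y = m*x + c."""
    n = len(xs)
    squared_errors = [(m * x + c - y) ** 2 for x, y in zip(xs, ys)]
    return sum(squared_errors) / (2 * n)


# Three illustrative points that lie exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0]
ys = [1.0, 3.0, 5.0]

perfect_fit_cost = half_mse(xs, ys, 2.0, 1.0)  # every error is zero
poor_fit_cost = half_mse(xs, ys, 0.0, 0.0)     # (1 + 9 + 25) / 6
print(perfect_fit_cost, poor_fit_cost)
```

The cost is zero only when the line passes through every point, and it grows as the coefficients move away from the best fit.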

Optimization

Optimization is achieved through the gradient descent algorithm.

One of the advantages of Theano is that it allows for symbolic differentiation. This makes the process of calculating the gradient extremely easy. gradm is the derivative of the cost with respect to m and gradc is the derivative of the cost with respect to c.
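For reference, the two derivatives can also be worked out by hand. The notation here is introduced only for this derivation: J is the cost, N is num_samples, and (x_i, y_i) are the individual data points.

```latex
J(m, c) = \frac{1}{2N} \sum_{i=1}^{N} \left( m x_i + c - y_i \right)^2

\frac{\partial J}{\partial m} = \frac{1}{N} \sum_{i=1}^{N} \left( m x_i + c - y_i \right) x_i
\qquad
\frac{\partial J}{\partial c} = \frac{1}{N} \sum_{i=1}^{N} \left( m x_i + c - y_i \right)
```

These are the expressions that T.grad is expected to arrive at symbolically for this cost, up to whatever simplifications Theano applies to the graph.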

We define two functions, train and test. The train function takes X and Y as input and trains the model to minimize the cost. The actual optimization is done by the updates parameter of the train function. Every time the train function is called, gradm and gradc are calculated and the values of m and c are replaced by m-learning_rate*gradm and c-learning_rate*gradc respectively. The test function gives the value predicted by the trained model for any input x.

We train the model for 10000 steps with a learning rate of 0.01. Since m and c are shared variables, we need to use get_value() to access their values.

    gradm = T.grad(cost,m)
    gradc = T.grad(cost,c)

    learning_rate = 0.01
    training_steps = 10000

    train = theano.function([x,y],cost,updates = [(m,m-learning_rate*gradm),(c,c-learning_rate*gradc)])
    test = theano.function([x],prediction)

    for i in range(training_steps):
        costM = train(X,Y)
        print(costM)

    print("Slope :")
    print(m.get_value())
    print("Intercept :")
    print(c.get_value())
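The update rule described above can also be written out by hand, which may help in seeing what the updates parameter does at each step. The sketch below is plain Python rather than Theano, uses hand-derived gradients in place of T.grad, and starts from zero instead of random values so that runs are repeatable; the function name `fit_line` is made up for illustration.

```python
def fit_line(xs, ys, learning_rate=0.01, steps=10000):
    """Gradient descent on sum((m*x + c - y)^2) / (2 * N); returns (m, c)."""
    n = len(xs)
    m, c = 0.0, 0.0  # assumption: zero start instead of random initialization
    for _ in range(steps):
        errors = [m * x + c - y for x, y in zip(xs, ys)]
        grad_m = sum(e * x for e, x in zip(errors, xs)) / n  # d(cost)/dm
        grad_c = sum(errors) / n                             # d(cost)/dc
        # Both coefficients are updated together from the same gradients.
        m, c = m - learning_rate * grad_m, c - learning_rate * grad_c
    return m, c


# Same training data as in the post, as plain lists.
X = [3, 4, 5, 6.1, 6.3, 2.88, 8.89, 5.62, 6.9, 1.97, 8.22, 9.81, 4.83, 7.27, 5.14, 3.08]
Y = [0.9, 1.6, 1.9, 2.9, 1.54, 1.43, 3.06, 2.36, 2.3, 1.11, 2.57, 3.15, 1.5, 2.64, 2.20, 1.21]

m, c = fit_line(X, Y)
print("Slope :", m)
print("Intercept :", c)
```

With the same learning rate and number of steps, this should settle on roughly the same line as the Theano version, since both minimize the same cost.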

Final Code

    import theano
    import numpy
    import theano.tensor as T
    import matplotlib.pyplot as plt

    rng = numpy.random

    # Training data
    X = numpy.asarray([3,4,5,6.1,6.3,2.88,8.89,5.62,6.9,1.97,8.22,9.81,4.83,7.27,5.14,3.08])
    Y = numpy.asarray([0.9,1.6,1.9,2.9,1.54,1.43,3.06,2.36,2.3,1.11,2.57,3.15,1.5,2.64,2.20,1.21])

    # Randomly initialized coefficients, stored as shared variables
    m_value = rng.randn()
    c_value = rng.randn()
    m = theano.shared(m_value,name ='m')
    c = theano.shared(c_value,name ='c')

    # Symbolic inputs
    x = T.vector('x')
    y = T.vector('y')
    num_samples = X.shape[0]

    # Model and cost
    prediction = T.dot(x,m)+c
    cost = T.sum(T.pow(prediction-y,2))/(2*num_samples)

    # Gradients of the cost with respect to each coefficient
    gradm = T.grad(cost,m)
    gradc = T.grad(cost,c)

    learning_rate = 0.01
    training_steps = 10000

    train = theano.function([x,y],cost,updates = [(m,m-learning_rate*gradm),(c,c-learning_rate*gradc)])
    test = theano.function([x],prediction)

    for i in range(training_steps):
        costM = train(X,Y)
        print(costM)

    print("Slope :")
    print(m.get_value())
    print("Intercept :")
    print(c.get_value())

    # Plot the training points (red circles) and the fitted line
    a = numpy.linspace(0,10,10)
    b = test(a)
    plt.plot(X,Y,'ro')
    plt.plot(a,b)
    plt.show()

NOTE: X and Y are the input data vectors, while x and y are the Theano symbolic variables. All the expressions and functions are written in terms of the symbolic variables x and y, while the data vectors X and Y are passed in when calling the functions.

Thanks, I was looking for something just like this. Is it possible to extend this to multivariate regression, where X is a matrix of variables instead of a single vector X?

Usually in our multivariate regression, all variables that could potentially affect the output are represented as a vector. Assuming the weight vector W and scalar bias value b, we get a scalar output.

If you need to specifically use a matrix for X, then the shapes of the weight W and bias b will also change. Most probably W will be another matrix and b would be a vector. With this configuration, you would probably be getting a vector as the final output.
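One common way to read the first case above is that each training sample is a vector of features, so the full data set forms a matrix with one row per sample, while the model still has a weight vector and a scalar bias. The sketch below shows that setup in plain Python rather than Theano; the function name `fit_multivariate`, the toy data, and the learning rate and step count are all assumptions chosen for illustration.

```python
def fit_multivariate(rows, targets, learning_rate=0.1, steps=2000):
    """Gradient descent for y = w . x + b with one feature vector per row."""
    n = len(rows)
    num_features = len(rows[0])
    w = [0.0] * num_features  # weight vector, one entry per feature
    b = 0.0                   # scalar bias
    for _ in range(steps):
        # Prediction error for every sample under the current w and b.
        errors = [sum(wj * xj for wj, xj in zip(w, row)) + b - t
                  for row, t in zip(rows, targets)]
        grad_w = [sum(e * row[j] for e, row in zip(errors, rows)) / n
                  for j in range(num_features)]
        grad_b = sum(errors) / n
        w = [wj - learning_rate * gj for wj, gj in zip(w, grad_w)]
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2*x1 - 3*x2 + 1 (no noise).
rows = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
targets = [2.0 * r[0] - 3.0 * r[1] + 1.0 for r in rows]

w, b = fit_multivariate(rows, targets)
print(w, b)
```

The cost and the update rule are the same as in the single-variable case; only the prediction changes from m*x + c to a dot product plus a bias.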

Nice demonstration (thanks!) , but a couple of minor issues:

– If you have and are using a GPU with Theano it complains about type conversion, unless you put in “allow_input_downcast=True” into the calls to function()

– The plot lines at the bottom are incomplete, and I’m not sure how to fix it so it draws correctly. I couldn’t figure out how the roplot bit works…

    a = numpy.linspace(0, 10, 10)
    b = test(a)
    plt.plot(X, Y, 'ro')  # 'ro' draws the data points as red circles
    plt.plot(a, b)
    plt.show()

How can we test on new test cases now?

You can test new cases by using the test function that has been defined. If your test cases are present in a variable named “testx”, then the predictions can be made using:

predictions = test(testx)

Thank you so much, Roshansanthosh. It worked fine.

How can we train a model that has to show 0 for all numbers below 100 and 1 for all numbers above 100, that is, with the threshold set at 100?
